How to Upload Files to Google Colab

by Bharath Raj

How to Upload large files to Google Colab and remote Jupyter notebooks

If you haven't heard about it, Google Colab is a platform that is widely used for testing out ML prototypes on its free K80 GPU. If you have heard about it, chances are that you gave it a shot. But you might have become exasperated because of the complexity involved in transferring large datasets.

This blog compiles some of the methods that I've found useful for uploading and downloading large files from your local system to Google Colab. I've also included additional methods that can be useful for transferring smaller files with less effort. Some of the methods can be extended to other remote Jupyter notebook services, like Paperspace Gradient.

Transferring Large Files

The most efficient method to transfer large files is to use a cloud storage system such as Dropbox or Google Drive.

1. Dropbox

Dropbox offers up to 2GB of free storage space per account. This sets an upper limit on the amount of data that you can transfer at any moment. Transferring via Dropbox is relatively easy. You can also follow the same steps for other notebook services, such as Paperspace Gradient.

Step 1: Archive and Upload

Uploading a large number of images (or files) individually will take a very long time, since Dropbox (or Google Drive) has to individually assign IDs and attributes to every image. Therefore, I recommend that you archive your dataset first.

One possible method of archiving is to convert the folder containing your dataset into a '.tar' file. The code snippet below shows how to convert a folder named "Dataset" in the home directory to a "dataset.tar" file, from your Linux terminal.

tar -cvf dataset.tar ~/Dataset

Alternatively, you could use WinRAR or 7-Zip, whichever is more convenient for you. Upload the archived dataset to Dropbox.
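If you would rather stay in Python, the same archiving step can be sketched with the standard-library tarfile module. This is only a minimal sketch and not part of the original workflow: the helper names make_archive and extract_archive are hypothetical, and the paths in the usage note are placeholders.

```python
import tarfile
from pathlib import Path


def make_archive(folder, archive_path):
    """Pack `folder` into an uncompressed .tar file, similar to `tar -cf`."""
    folder = Path(folder).expanduser()
    with tarfile.open(str(archive_path), "w") as tar:
        # arcname keeps only the folder name, so the archive unpacks to
        # "Dataset/..." instead of reproducing the full home-directory path.
        tar.add(str(folder), arcname=folder.name)
    return Path(archive_path)


def extract_archive(archive_path, destination="."):
    """Unpack a .tar file into `destination`, for example on the notebook side."""
    with tarfile.open(str(archive_path), "r") as tar:
        tar.extractall(str(destination))
```

For example, make_archive("~/Dataset", "dataset.tar") would create the archive locally, and extract_archive("dataset.tar") would unpack it once the file has reached the notebook. Only extract archives you created yourself, since extractall trusts the paths stored inside the archive.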

Step 2: Clone the Repository

Open Google Colab and start a new notebook.

Clone this GitHub repository. I've modified the original code so that it can add the Dropbox access token from the notebook. Execute the following commands one by one.

!git clone https://github.com/thatbrguy/Dropbox-Uploader.git
cd Dropbox-Uploader
!chmod +x dropbox_uploader.sh

Step 3: Create an Access Token

Execute the following command to see the initial setup instructions.

!bash dropbox_uploader.sh

It will display instructions on how to obtain the access token, and will ask you to execute the following command. Replace the placeholder text with your access token, and then execute:

!echo "INPUT_YOUR_ACCESS_TOKEN_HERE" > token.txt

Execute !bash dropbox_uploader.sh again to link your Dropbox account to Google Colab. Now you can download and upload files from the notebook.

Step 4: Transfer Contents

Download to Colab from Dropbox:

Execute the following command. The argument is the name of the file on Dropbox.

!bash dropbox_uploader.sh download YOUR_FILE.tar

Upload to Dropbox from Colab:

Execute the following command. The first argument (result_on_colab.txt) is the name of the file you want to upload. The second argument (dropbox.txt) is the name you want to save the file as on Dropbox.

!bash dropbox_uploader.sh upload result_on_colab.txt dropbox.txt

2. Google Drive

Google Drive offers up to 15GB of free storage for every Google account. This sets an upper limit on the amount of data that you can transfer at any moment. You can always expand this limit to larger amounts. Colab simplifies the authentication process for Google Drive.

That being said, I've also included the necessary modifications you can perform, so that you can access Google Drive from other Python notebook services as well.

Step 1: Archive and Upload

Just as with Dropbox, uploading a large number of images (or files) individually will take a very long time, since Google Drive has to individually assign IDs and attributes to every image. So I recommend that you archive your dataset first.

One possible method of archiving is to convert the folder containing your dataset into a '.tar' file. The code snippet below shows how to convert a folder named "Dataset" in the home directory to a "dataset.tar" file, from your Linux terminal.

tar -cvf dataset.tar ~/Dataset

And again, you can use WinRAR or 7-Zip if you prefer. Upload the archived dataset to Google Drive.

Step 2: Install dependencies

Open Google Colab and start a new notebook. Install PyDrive using the following command:

!pip install PyDrive

Import the necessary libraries and methods. (The google.colab and oauth2client imports are only required for Google Colab. Do not import them if you're not using Colab.)

import os
from pydrive.auth import GoogleAuth
from pydrive.drive import GoogleDrive
from google.colab import auth
from oauth2client.client import GoogleCredentials

Step 3: Authorize Google SDK

For Google Colab:

Now, you have to authorize Google SDK to access Google Drive from Colab. First, execute the following commands:

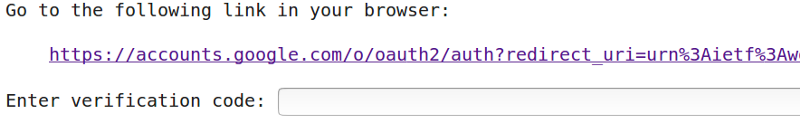

auth.authenticate_user() gauth = GoogleAuth() gauth.credentials = GoogleCredentials.get_application_default() bulldoze = GoogleDrive(gauth) You will get a prompt as shown below. Follow the link to obtain the central. Copy and paste it in the input box and press enter.

For other Jupyter notebook services (Ex: Paperspace Gradient):

Some of the following steps are obtained from PyDrive's quickstart guide.

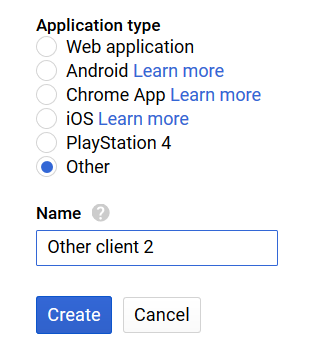

Go to APIs Console and make your own project. Then, search for 'Google Bulldoze API', select the entry, and click 'Enable'. Select 'Credentials' from the left menu, click 'Create Credentials', select 'OAuth client ID'. You should see a carte du jour such as the image shown beneath:

Set up "Application Type" to "Other". Give an appropriate name and click "Salvage".

Download the OAuth 2.0 client ID you just created. Rename it to client_secrets.json

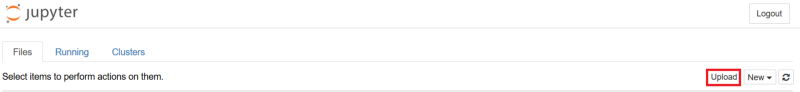

Upload this JSON file to your notebook. You can do this by clicking the "Upload" button from the homepage of the notebook (Shown Beneath). (Note: Practice non employ this button to upload your dataset, as it will be extremely fourth dimension consuming.)

Now, execute the following commands:

gauth = GoogleAuth()
gauth.CommandLineAuth()
drive = GoogleDrive(gauth)

The rest of the process is similar to that of Google Colab.

Step 4: Obtain your File's ID

Enable link sharing for the file you want to transfer. Copy the link. You may get a link such as this:

https://drive.google.com/open?id=YOUR_FILE_ID

Copy only the part of the above link that follows "id=" (shown here as YOUR_FILE_ID).
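If you prefer not to copy the ID by hand, it can be pulled out of the link programmatically. The following is a minimal sketch that assumes the link has the "open?id=..." form shown above; the function name drive_file_id is hypothetical, and other sharing link formats are not handled.

```python
from urllib.parse import urlparse, parse_qs


def drive_file_id(share_link):
    """Return the value of the `id` query parameter from a sharing link."""
    query = parse_qs(urlparse(share_link).query)
    ids = query.get("id")
    if not ids:
        # The link does not follow the "...?id=FILE_ID" pattern.
        raise ValueError("no 'id' parameter found in link: " + share_link)
    return ids[0]
```

The returned string is what you would pass as YOUR_FILE_ID in the next step.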

Step 5: Transfer contents

Download to Colab from Google Drive:

Execute the following commands. Here, YOUR_FILE_ID is obtained in the previous step, and DOWNLOAD.tar is the name (or path) you want to save the file as.

download = drive.CreateFile({'id': 'YOUR_FILE_ID'})
download.GetContentFile('DOWNLOAD.tar')

Upload to Google Drive from Colab:

Execute the following commands. Here, FILE_ON_COLAB.txt is the name (or path) of the file on Colab, and DRIVE.txt is the name (or path) you want to save the file as (on Google Drive).

upload = drive.CreateFile({'title': 'DRIVE.txt'})
upload.SetContentFile('FILE_ON_COLAB.txt')
upload.Upload()

Transferring Smaller Files

Occasionally, you may want to pass just one csv file and don't want to go through this entire hassle. No worries, there are much simpler methods for that.

1. Google Colab files module

Google Colab has its inbuilt files module, with which you can upload or download files. You can import it by executing the following:

from google.colab import files

To Upload:

Use the following command to upload files to Google Colab:

files.upload()

You will be presented with a GUI with which you can select the files you want to upload. It is not recommended to use this method for large files, as it is very slow.
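As far as I know, files.upload() also returns a dictionary that maps each uploaded file name to its contents as bytes, so a small csv file can be parsed straight from that result. The sketch below works under that assumption; the helper name rows_from_upload and the file name data.csv are hypothetical.

```python
import csv
import io


def rows_from_upload(uploaded, filename, encoding="utf-8"):
    """Parse one uploaded csv file into a list of rows (lists of strings).

    `uploaded` is assumed to be a dict of {file name: bytes}, which is the
    shape files.upload() is expected to return.
    """
    text = uploaded[filename].decode(encoding)
    return list(csv.reader(io.StringIO(text)))
```

In a Colab cell you might then run uploaded = files.upload() followed by rows = rows_from_upload(uploaded, 'data.csv').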

To Download:

Use the following command to download a file from Google Colab:

files.download('example.txt')

This feature works best in Google Chrome. In my experience, it only worked once on Firefox, out of about 10 tries.

2. GitHub

This is a "hack-ish" way to transfer files. You can create a GitHub repository with the small files that you want to transfer.

Once you create the repository, you can just clone it in Google Colab. You can then push your changes to the remote repository and pull the updates onto your local system.

Just do note that GitHub limits files added through the browser to 25MB each, blocks files larger than 100MB when they are pushed with Git, and recommends keeping a repository under 1GB.
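Before pushing, it can help to check whether any file in the repository folder is larger than the per-file limit. Here is a minimal sketch, using the 25MB figure mentioned above as the default; the function name files_over_limit is hypothetical and the limit is just a parameter you can change.

```python
from pathlib import Path


def files_over_limit(folder, limit_mb=25):
    """Return (relative path, size in MB) for every file larger than `limit_mb`."""
    folder = Path(folder)
    limit_bytes = limit_mb * 1024 * 1024
    oversized = []
    for path in sorted(folder.rglob("*")):
        relative = path.relative_to(folder)
        # Skip directories and Git's own metadata under .git/
        if not path.is_file() or ".git" in relative.parts:
            continue
        size = path.stat().st_size
        if size > limit_bytes:
            oversized.append((str(relative), size / (1024 * 1024)))
    return oversized
```

An empty list means every file is under the limit; anything listed is a candidate for the Dropbox or Google Drive methods described earlier.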

Thanks for reading this article! Leave some claps if you found it interesting! If you have any questions, you could hit me up on social media or send me an email (bharathrajn98[at]gmail[dot]com).


Source: https://www.freecodecamp.org/news/how-to-transfer-large-files-to-google-colab-and-remote-jupyter-notebooks-26ca252892fa/
